Friday, Oct. 14, was an eventful day for me. It started with a meeting with Secretary of State Antony Blinken, and it ended with death threats.

I was one of a small group of Iranian American women invited to meet with top State Department officials to discuss the female-led protest movement that is gathering strength in Iran. Beforehand, the secretary’s office asked for our consent to have news organizations present while the secretary made a statement. Not anticipating any harm, I said yes.

Unfortunately, I was mistaken. Over the following week, I learned firsthand how the policy and design decisions of social media platforms, such as Instagram, directly affect users’ personal safety.

Soon after photos from the State Department meeting appeared in the news, my Twitter mentions — normally nonexistent, because I barely use Twitter — ratcheted up. I saw tweets containing misinformation, hate, harassment, violence and vile insults. That night, the attacks spread to Instagram. The next morning, I woke to a slew of message requests to my private Instagram account. Alongside hateful and harassing messages were death threats.

As someone who readily introduces herself as a “policy nerd” — certainly not a public figure — I was stunned.

Not knowing how to deal with the death threats, I followed the platform’s user interface, hoping it would guide me to an appropriate response. Instagram gives three options at the bottom of message requests: block, delete or accept.

Clearly, “block” was my only viable option. It would make no sense to “accept” the hate and threats, and to “delete” without blocking would allow these criminals (or bots) to return. No, thank you.

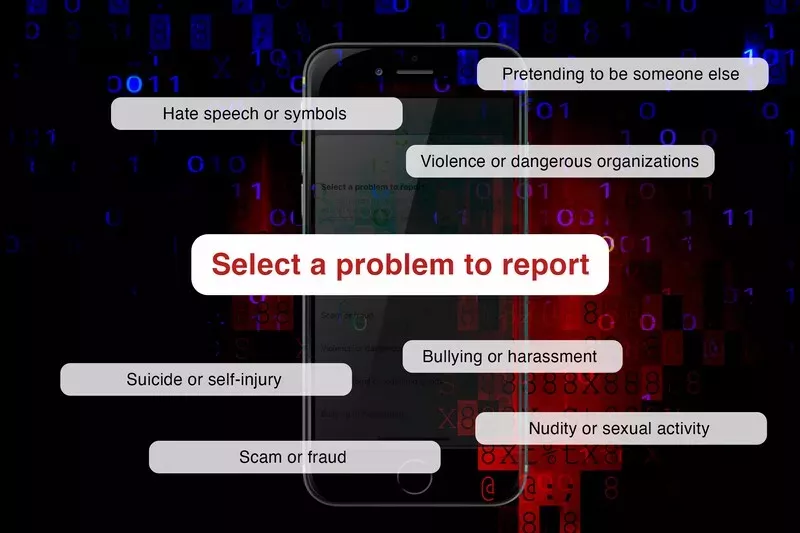

Tapping “block” brings up a menu with options to “ignore”, “block account” or “report”. Tapping “report” leads to a new menu, which says: “Select a problem to report”.

Here, I ran into a new problem (atop the death threats): None of the reporting categories sufficiently captures the severity of a death threat.

So, I reported all the messages under the two closest options: “violence or dangerous organizations” and “bullying or harassment”. Then, I waited. Two days later, although I had reached out to a friend who works at Meta and accepted her offer to submit an internal escalation, no response had arrived. The threatening message requests kept coming. Finally, a private security professional showed me how to change my Instagram settings to stop message requests.

It’s worth noting that in many places, local police lack jurisdiction in cases of threats made on social media platforms. When my local officers heard that the death threats had come via Instagram, they told me they had no jurisdiction in the matter, instructed me to contact Meta and hung up.

By Wednesday — four days later — I’d still heard nothing from anyone at Meta who wasn’t my friend or a friend of a friend. Coincidentally, Nick Clegg, Meta’s global affairs president, was speaking that Friday at the Council on Foreign Relations (CFR), of which I am a member. So, by a combination of coincidence, privilege and some courage, I had the opportunity to ask Clegg why Meta doesn’t have a “death threat” option in its reporting process.

I am grateful that he took my question seriously. He seemed genuinely sorry and at a loss for what to say or do, and his team quickly followed up. Yet, the larger problem with the reporting process should be solved at scale.

There are at least three ways that Meta’s approach and choice architecture could be redesigned to better uphold its community guidelines and ensure greater user safety: First, add a “death threat” option to the reportable problem categories. For example, Twitter’s reporting process includes a category specifying “threatening me with violence”. In most jurisdictions, a death threat is a criminal offense — especially when delivered in writing. It should be catalogued as such.

Second, assemble a team dedicated to monitoring and handling reports of death threats. If local police lack jurisdiction over its platform, Meta must step up. Users who threaten harm should be identified and banned from platforms without delay. Moreover, in addition to enforcing its own community guidelines, the company should work with law enforcement to ensure that incidents are properly reported and local laws are upheld.

Third, perhaps the simplest and quickest action: under settings, privacy and messages, set the default to “don’t receive”. Receiving messages from others should be an opt-in setting, not an opt-out one. In his remarks at the CFR, Clegg mentioned that Meta employs nudge theory principles to steer users toward optimal practices. It took three days for me to learn that I had the choice to not receive message requests from people I don’t follow. At a minimum, “don’t receive” should be the default for users who have private accounts.

Speaking as someone whose life was upended by death threats on a Meta platform, I have one more ask of the company: Please move fast and fix things.

Sherry Hakimi is the founder and executive director of genEquality, an organization that promotes gender equality and inclusion.